India/Global: New technologies in automated social protection systems could threaten human rights

Governments must ensure that automated social protection systems are fit for purpose and do not prevent eligible people from receiving social assistance, Amnesty International said today as it published a technical explainer on the technology underlying Samagra Vedika, an algorithmic system that has been used in the Indian state of Telangana since 2016.

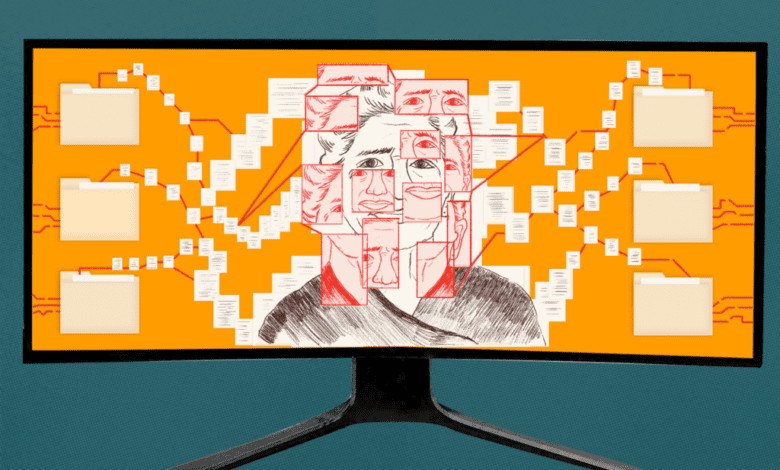

The technical explainer clarifies the human rights risks of Samagra Vedika and of its use of a technical process called “entity resolution”, in which machine learning algorithms are used to merge databases in order to assess the eligibility of social assistance applicants and to detect fraudulent and duplicate beneficiaries in social protection programs.
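
Samagra Vedika’s actual matching logic has not been made public, so the following is only a minimal, hypothetical sketch of the general technique of entity resolution. Records from two databases are compared pair by pair, per-field string similarities are combined into a weighted score, and any pair scoring above a threshold is treated as the same person. The two databases, their field names, the weights and the 0.85 threshold are all illustrative assumptions. Real systems often use trained models and “blocking” to avoid comparing every possible pair.

```python
from difflib import SequenceMatcher
from itertools import product

# HYPOTHETICAL records from two separate government databases.
# Field names, weights and threshold are illustrative assumptions,
# not details of Samagra Vedika or of any vendor's software.
WELFARE_DB = [
    {"id": "W1", "name": "Ramesh Kumar", "father": "Suresh Kumar", "district": "Hyderabad"},
    {"id": "W2", "name": "Lakshmi Devi", "father": "Venkat Rao", "district": "Warangal"},
]
VEHICLE_DB = [
    {"id": "V1", "name": "Ramesh Kumar", "father": "Suresh Kumaar", "district": "Hyderabad"},
    {"id": "V2", "name": "Laxmi Devi", "father": "Venkata Rao", "district": "Karimnagar"},
]

WEIGHTS = {"name": 0.5, "father": 0.3, "district": 0.2}  # assumed field weights
THRESHOLD = 0.85  # assumed cut-off for declaring a match


def similarity(a: str, b: str) -> float:
    """Case-insensitive character-level similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def match_score(r1: dict, r2: dict) -> float:
    """Weighted sum of per-field similarities between two records."""
    return sum(w * similarity(r1[f], r2[f]) for f, w in WEIGHTS.items())


def resolve(db_a: list[dict], db_b: list[dict]) -> list[tuple[str, str, float]]:
    """Compare every cross-database pair and keep those at or above the threshold."""
    links = []
    for r1, r2 in product(db_a, db_b):
        score = match_score(r1, r2)
        if score >= THRESHOLD:
            links.append((r1["id"], r2["id"], round(score, 3)))
    return links


if __name__ == "__main__":
    for link in resolve(WELFARE_DB, VEHICLE_DB):
        print(link)
```

With these assumed values, the two “Ramesh Kumar” records are linked despite a misspelled father’s name, while the “Lakshmi Devi” and “Laxmi Devi” records fall below the threshold. Moving the threshold trades missed matches against false matches, and either kind of error can change who is found eligible.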

The publication of the technical explainer follows media reports blaming Samagra Vedika for allegedly excluding thousands of people from access to social protection measures, including those related to food security, income and housing. A 2024 investigation published by Al Jazeera exposed how errors in the system, which consolidates individual data from multiple government databases, led to thousands of families being denied vital benefits, raising serious human rights concerns about their right to social security.

“Automated decision-making systems like Samagra Vedika are opaque and flatten people’s lives by reducing them to numbers using artificial intelligence (AI) and algorithms. In a regulatory vacuum and without transparency, investigating the impacts of such systems on human rights is extremely challenging,” said David Nolan, Senior Investigative Researcher at Amnesty Tech.

The use of entity resolution represents a new class of welfare technology in which complex processes, often incorporating AI and machine learning, systematically compare pairs of records about individuals in large datasets to determine whether they match, and then use the result to assess each applicant’s eligibility against various criteria and to detect fraudulent or duplicate beneficiaries.
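
The article does not disclose which criteria Samagra Vedika applies or how it weighs linked records. As a purely hypothetical illustration of why matching errors matter at the eligibility stage, the sketch below assumes a simple rule set (a linked four-wheeler registration, or a linked income record above a made-up limit, disqualifies an applicant) and shows how a single false-positive link flips an otherwise eligible applicant to ineligible. The `Applicant` type, the record sources and the `INCOME_LIMIT` value are all invented for this example.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL rules for illustration only; these are not the real
# eligibility criteria used in Telangana or by Samagra Vedika.
INCOME_LIMIT = 150_000  # assumed annual income ceiling (made-up value)


@dataclass
class Applicant:
    applicant_id: str
    name: str
    # Records from other government databases that entity resolution
    # has linked to this applicant, correctly or not.
    linked_records: list[dict] = field(default_factory=list)


def is_eligible(applicant: Applicant) -> tuple[bool, str]:
    """Return (eligible, reason) based on every record linked to the applicant."""
    for rec in applicant.linked_records:
        if rec.get("source") == "vehicle_registry" and rec.get("vehicle") == "four_wheeler":
            return False, f"linked to four-wheeler record {rec['record_id']}"
        if rec.get("source") == "income_records" and rec.get("annual_income", 0) > INCOME_LIMIT:
            return False, f"linked to income record {rec['record_id']} above limit"
    return True, "no disqualifying records linked"


# The same applicant, before and after the matcher wrongly links a
# namesake's car registration to her profile.
correct = Applicant("A1", "Lakshmi Devi",
                    [{"source": "ration_card", "record_id": "R17"}])
mislinked = Applicant("A1", "Lakshmi Devi", [
    {"source": "ration_card", "record_id": "R17"},
    {"source": "vehicle_registry", "record_id": "V9", "vehicle": "four_wheeler"},
])

print(is_eligible(correct))    # (True, 'no disqualifying records linked')
print(is_eligible(mislinked))  # (False, 'linked to four-wheeler record V9')
```

In this sketch the eligibility rule itself behaves as written; the exclusion comes entirely from the upstream link, which an applicant who sees only the final decision has no direct way to inspect.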

Amnesty International spent a year designing, and attempting to carry out, an audit of the Samagra Vedika system. Despite these efforts, the audit remains incomplete because of difficulties in accessing the underlying system and the general lack of transparency from the system’s developers and implementers. However, in the process Amnesty International gained fundamental methodological insights into the nascent field of algorithmic investigations. By sharing them, Amnesty International aims to increase the collective capacity of civil society, NGOs and journalists to carry out future research in this field.

“Governments must realize there are real lives at stake here,” said David Nolan.

“Governments’ procurement of these systems from private companies raises the barrier for civil society and journalists seeking to investigate the technical makeup of digitalized social protection. As a result, the public and private actors responsible for designing and implementing these automated tools escape accountability, while the people affected by these systems are trapped in a bureaucratic maze, with little or no access to remedy.”

The case of Samagra Vedika in Telangana is emblematic of how governments are increasingly relying on AI and automated decision-making (ADM) systems to administer social protection programs. This trend often leads to unfair outcomes for already marginalized groups, such as exclusion from social security benefits, without adequate transparency, accountability or redress.

It is imperative that all States carry out comprehensive human rights impact assessments before introducing technology into social protection systems. Any technology introduced must be accompanied by appropriate and robust human rights impact assessments throughout the system’s entire lifecycle, from design to deployment, and by effective mitigation measures, as part of a comprehensive human rights due diligence process.

Engagement with affected communities is essential and any changes to vital support systems must be communicated in a clear and accessible way. Ultimately, if a system is found to pose significant risks to human rights that cannot be sufficiently mitigated, it should not be implemented.

Background

The technical explainer builds independently on an investigation published by Al Jazeera in 2024 in collaboration with the Pulitzer Center’s Artificial Intelligence (AI) Accountability Network. That investigation revealed a pattern of poor implementation of the Samagra Vedika system, resulting in the arbitrary denial of social assistance to thousands of people.

Amnesty International wrote to Posidex Technologies Private Limited – the private company that provides the entity resolution software on which the Samagra Vedika system is based – prior to the publication of this technical explainer. Amnesty International had not received a response by the time of publication.

In 2023, Amnesty International’s investigation Trapped by Automation: Poverty and Discrimination in Serbia’s Welfare State documented how many people, especially Roma and people with disabilities, were unable to pay their bills or put food on the table, and struggled to make ends meet after being removed from social assistance following the introduction of the Social Card registry.

In 2021, Amnesty International documented how an algorithmic system used by Dutch tax authorities had racially profiled child care benefit recipients. The tool was supposed to check whether benefit claims were genuine or fraudulent, but the system unfairly penalized thousands of low-income and immigrant parents, plunging them into exorbitant debt and poverty.