AI deepfakes of Rihanna and Katy Perry convinced some people they were at the Met Gala

No, Katy Perry and Rihanna did not attend the Met Gala this year. But that hasn’t stopped AI-generated images from fooling some fans into thinking the stars appeared on the steps of fashion’s biggest night.

Deepfake images depicting a handful of big names at the Metropolitan Museum of Art’s annual fundraiser quickly spread online on Monday and Tuesday.

Some eagle-eyed social media users spotted discrepancies, and the platforms themselves, through features like X’s Community Notes, were quick to note that the images were likely created using artificial intelligence. One clue that a viral image of Perry in a dress covered in flowers was fake, for example, was that the carpet on the stairs matched that of the 2018 event, not this year’s green fabric lined with vivid foliage.

Still, others were fooled – including Perry’s own mother. Hours after at least two AI-generated images of the singer began circulating online, Perry reposted them to her Instagram, accompanied by a screenshot of text that appeared to be from her mother praising her for what she thought was a real appearance at the Met Gala.

“lol mom the AI got you too, BEWARE!” Perry responded in the exchange.

Perry’s representatives did not immediately respond to The Associated Press’ request for further comment and information about why Perry was not at Monday night’s event. But in a caption on her Instagram post, Perry wrote, “I couldn’t make it to the MET, I had to work.” The post also included a muted video of her singing.

Meanwhile, a fake image of Rihanna in a stunning white dress embroidered with flowers, birds and branches also made the rounds online. The multihyphenate had been a confirmed guest at this year’s Met Gala, but before the carpet closed on Monday night, Vogue representatives said she would not be attending.

People magazine reported that Rihanna had the flu, but her representatives did not immediately confirm the reason for her absence. Rihanna’s representatives also did not immediately respond to requests for comment about the AI-generated image of the star.

While the source or sources of these images are hard to pin down, the realistic-looking Met Gala backdrop seen in many of them suggests that any AI tool used to create them was likely trained on images from past events.

The Met Gala’s official photographer, Getty Images, declined to comment on Tuesday.

Last year, Getty sued London-based Stability AI, a leading AI image-generation company, claiming it had copied more than 12 million photographs from Getty’s stock photography collection without permission. Getty has since launched its own AI image generator trained on its own collection, but it blocks attempts to generate what it describes as “problematic content.”

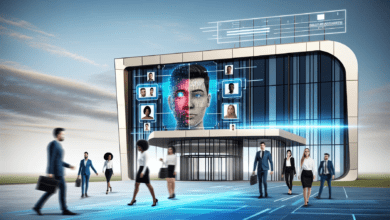

This is far from the first time we’ve seen generative AI, a branch of AI that can produce new content, used to create fakes. Image, video and audio deepfakes of prominent figures, from Pope Francis to Taylor Swift, have gained significant traction online before.

Experts note that each case highlights growing concerns around the misuse of this technology – particularly with regard to disinformation and the potential to carry out fraud, identity theft or propaganda, and even election manipulation.

“Once seeing was believing, now seeing is disbelieving,” said Cayce Myers, professor and director of graduate studies at Virginia Tech’s School of Communication, pointing to the impact of Perry’s AI-generated image on Monday. “(If) even a mother can be fooled into thinking the image is real, that shows the level of sophistication this technology now has.”

While using AI to generate images of celebrities in lavish make-believe dresses (which are easily proven to be fake at a highly publicized event like the Met Gala) may seem relatively harmless, Myers and others note that there is a well-documented history of more serious or harmful uses of this type of technology.

Earlier this year, fake sexually explicit and abusive images of Swift, for example, began circulating online — causing X, formerly Twitter, to temporarily block some searches. Victims of nonconsensual deepfakes range far beyond celebrities, of course, and advocates emphasize particular concern for victims who have little protection. Research shows that explicit AI-generated material overwhelmingly harms women and children – including disturbing cases of AI-generated nudes circulating through high schools.

And in an election year for several countries around the world, experts also continue to point to potential geopolitical consequences that misleading AI-generated material could have.

“The implications here go far beyond the security of the individual, and really touch on things like the security of the nation, the security of the entire society,” said David Broniatowski, an associate professor at George Washington University and a principal investigator at the school’s Institute for Trustworthy AI in Law and Society.

Utilizing what generative AI has to offer while building an infrastructure that protects consumers is a difficult task – especially as commercialization of the technology continues to grow at such a rapid rate. Experts point to the need for corporate accountability, universal industry standards and effective government regulation.

Tech companies are largely calling the shots when it comes to governing AI and its risks, as governments around the world work to catch up. Still, notable progress has been made over the past year. In December, the European Union reached an agreement on the world’s first comprehensive AI rules, but the law will not come into force until two years after final approval.